In this blog post, we will explore how we can take advantage of Ingress to expose applications, streamline traffic management, and enhance application security.


Introduction

In Kubernetes, when we deploy applications and create corresponding services, those services are of type ClusterIP by default and are only accessible from within the cluster. To expose them outside the cluster, we have to create services of type NodePort or LoadBalancer. A fourth type, ExternalName, works in the other direction: it lets clients inside the cluster reach an external DNS name through a service name.

| Service Type | Service Description |
|---|---|
| ClusterIP | Only accessible from within the Kubernetes cluster. |
| NodePort | Exposed on a static port on each node in the cluster. |
| LoadBalancer | Exposed on a load balancer managed by the cloud provider. |
| ExternalName | Maps a service to a DNS name. |

Ingress provides another, often more efficient, way to expose services outside the cluster. Ingress is not a service type like ClusterIP, NodePort, LoadBalancer, and ExternalName. Those are all types of the Service resource, which gives a set of pods a stable network endpoint. Ingress, by contrast, is a separate built-in Kubernetes API object (in the networking.k8s.io/v1 API group) that controls how external HTTP(S) traffic is routed to your services.

What are Ingress and an Ingress Controller?

An Ingress resource is a set of routing rules. Together with an Ingress controller, it works as a traffic controller that routes incoming requests to the appropriate services based on those rules. Using Ingress resources, we can efficiently expose our application services to the internet: the Ingress becomes the entry point to the cluster, routing traffic to the correct backend service.

Kubernetes does not ship with an Ingress controller by default, and Ingress resources have no effect until one is running, but a controller can be deployed easily. The Ingress controller typically runs as one or more pods within the cluster. It watches Ingress resources through the Kubernetes API, and when it detects changes or new Ingress rules, it reconfigures the load balancer or reverse proxy it manages so that traffic is routed accordingly.
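As an illustration of how a controller is tied to Ingress resources in the networking.k8s.io/v1 API, the sketch below defines an IngressClass that names the controller; each Ingress can then select that class through spec.ingressClassName. The class name nginx and the controller value k8s.io/ingress-nginx are what the Nginx Ingress controller commonly uses, but treat them as assumptions and check kubectl get ingressclass in your own cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx                       # assumed class name
  annotations:
    # Ingresses that do not set spec.ingressClassName fall back to this class
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  # Identifies the controller that implements this class (value used by ingress-nginx)
  controller: k8s.io/ingress-nginx
```

Marking a class as the default is convenient on a single-controller cluster like Minikube; with several controllers installed, setting spec.ingressClassName explicitly on each Ingress avoids ambiguity.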

Why is an Ingress controller required?

Each service of type LoadBalancer typically gets its own cloud load balancer with its own public IP address, and a single load balancer generally cannot be shared across multiple LoadBalancer services. With Ingress rules, you need only one load balancer in front of the Ingress controller, and the controller still routes traffic to multiple backend services. This is especially useful in cloud environments, where you avoid provisioning (and paying for) a separate load balancer per service.

What are the main benefits of using Ingress?

Ingress in Kubernetes provides a number of benefits, including:

| Benefit | Description |
|---|---|
| Layer 7 Load Balancing | Ingress allows you to define rules and configurations for routing external traffic to specific services within the cluster based on HTTP(S) rules. It acts as a layer 7 load balancer, distributing traffic to different services based on the requested host, path, or other HTTP parameters. |
| Centralized Configuration | Ingress provides a centralized configuration mechanism for managing external access to services. Instead of configuring separate load balancers or exposing each service individually, you can define routing rules and SSL/TLS termination in a single Ingress resource. |
| Path-Based Routing | Ingress supports path-based routing, allowing you to define different backend services based on the requested URL path. This enables you to create more advanced routing rules and split traffic among different services within the cluster based on the URL path. |
| Name-Based Virtual Hosting | With Ingress, you can configure name-based virtual hosting, where multiple domains or subdomains can be mapped to different backend services. This enables hosting multiple applications or microservices on a single cluster, each with its own domain or subdomain. |
| TLS Termination | Ingress can handle SSL/TLS termination, allowing you to terminate SSL/TLS connections at the edge of the cluster and forward unencrypted traffic to the backend services. This simplifies the management of SSL/TLS certificates and offloads the encryption/decryption process from the backend services. |
| Easier Service Exposure | Ingress simplifies the process of exposing services externally by providing a standardized way to define routing rules and manage external access. It abstracts away the complexity of setting up load balancers and routing configurations, making it easier to expose services to the outside world. |
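A minimal sketch of name-based virtual hosting follows: one Ingress sends requests to different services depending on the Host header, while all of them arrive at the same Ingress controller address. The host names app1.example.com and app2.example.com and the services app1-svc and app2-svc are hypothetical placeholders.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: virtual-hosts               # hypothetical name
spec:
  rules:
  # Requests with Host: app1.example.com go to app1-svc (hypothetical service)
  - host: app1.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: app1-svc
            port:
              number: 80
  # Requests with Host: app2.example.com go to app2-svc (hypothetical service)
  - host: app2.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: app2-svc
            port:
              number: 80
```

Both host names would need DNS records (or /etc/hosts entries) pointing at the Ingress controller's IP address.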

Enabling the Ingress add-on in Minikube

In this blog, we will use a Minikube cluster to demonstrate Ingress rules. Enabling the Ingress controller in Minikube is straightforward.

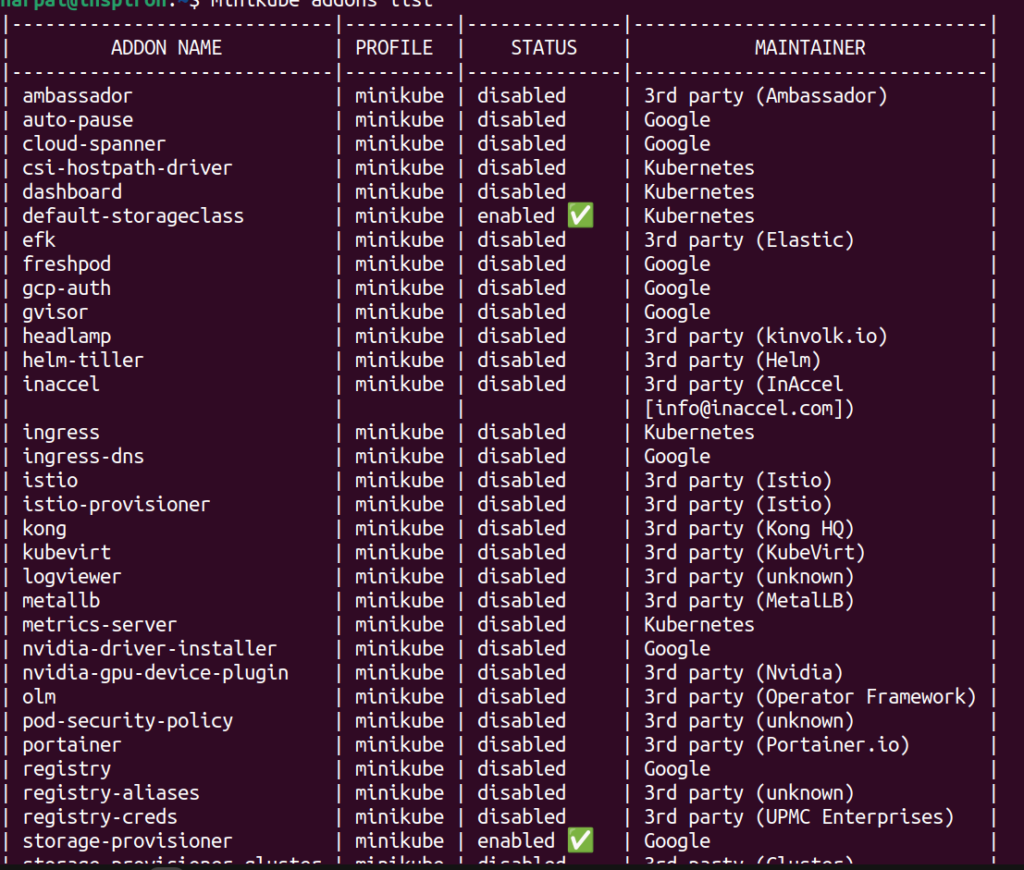

Check which add-ons are currently enabled in Minikube using the following command:

```shell
minikube addons list
```

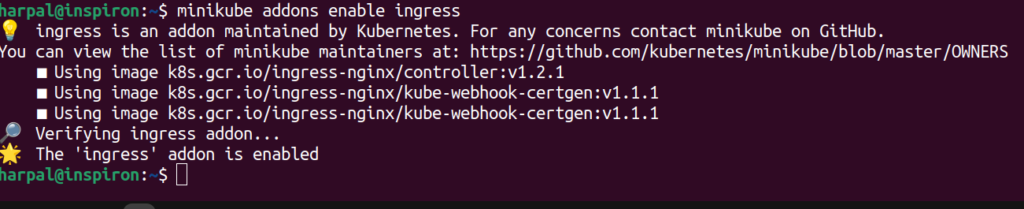

If the ingress add-on is shown as disabled, enable it using the following command:

```shell
minikube addons enable ingress
```

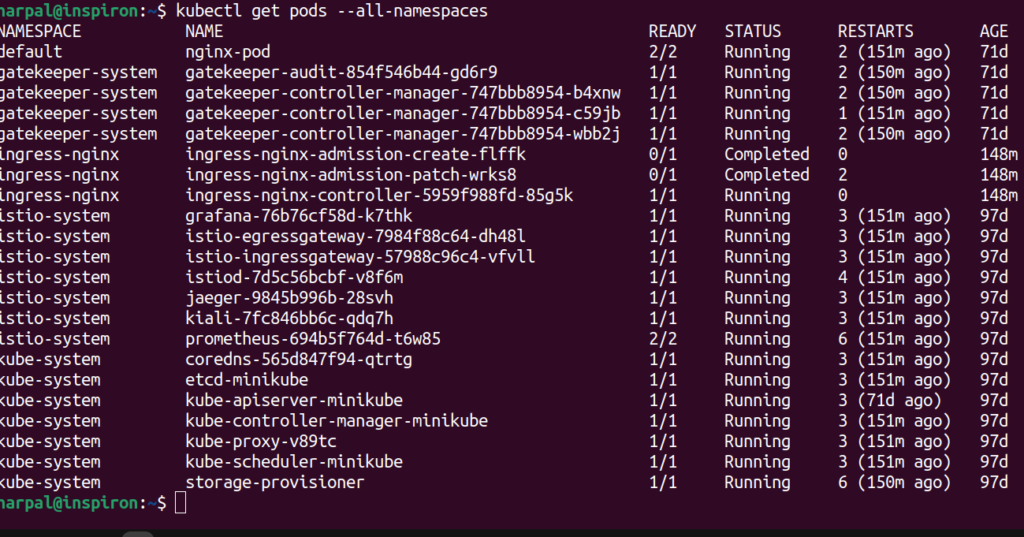

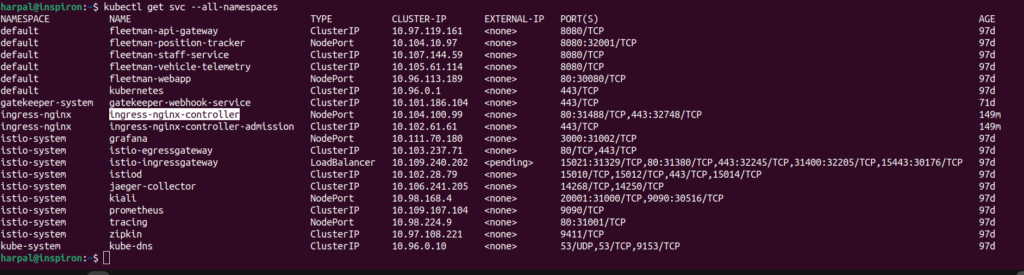

Let’s list all the pods in the cluster to see whether an Ingress controller pod has been launched.

Next, let’s check whether a service has also been created for the Ingress controller.

When running on a cloud provider, the external address may take some time to appear, because a cloud load balancer is provisioned for the Ingress controller behind the scenes.

Defining Ingress Rules: Ingress Resource and YAML Syntax

Ingress rules are defined in a YAML manifest, just like any other Kubernetes resource. Let’s see what a simple Ingress manifest looks like by using kubectl’s client-side dry-run feature.

```shell
kubectl create ingress foo --rule="foo.com/*=foosvc:80" --dry-run=client -o yaml
```

The command prints a manifest similar to the following:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: foo
spec:
  rules:
  - host: foo.com
    http:
      paths:
      - backend:
          service:
            name: foosvc
            port:
              number: 80
        path: /
        pathType: Prefix
```

The manifest above defines an Ingress with a single rule: every HTTP request that the Ingress controller receives for the host foo.com is sent to port 80 of the foosvc backend service.

How does Ingress work in Kubernetes?

When traffic hits an Ingress controller in Kubernetes, the following steps generally occur:

- Incoming Request: An external request, such as an HTTP or HTTPS request, arrives at the Ingress controller. This request is typically sent to the IP address or domain associated with the Ingress controller.

- Load Balancer (Optional): If a cloud provider’s load balancer is used in front of the cluster, the incoming request first reaches the load balancer. The load balancer then forwards the request to the Ingress controller. If there is no external load balancer, the request goes directly to the Ingress controller.

- Request Parsing: The Ingress controller receives the incoming request and parses it to extract information like the HTTP method, URL, headers, and other relevant details.

- Rule Matching: The Ingress controller matches the request against the routing rules it has already loaded from the Ingress resources. Controllers watch the Kubernetes API server for Ingress changes ahead of time rather than querying it on every request, and they select the matching rules by hostname and path.

- Routing Decision: Based on the matched rules, the Ingress controller determines the appropriate backend service to route the incoming request to. The decision is normally based on hostname and path; some controllers can also route on headers through controller-specific extensions.

- Load Balancing: If the backend service has multiple pod replicas, the Ingress controller distributes the incoming traffic across them, which provides scalability and fault tolerance. Many controllers, including the Nginx Ingress controller, send traffic directly to the pod endpoints rather than through the service’s cluster IP.

- Request Forwarding: The Ingress controller forwards the request to the selected backend service. It may modify the request as needed, for example by adding forwarding headers such as X-Forwarded-For so that the backend can still see the original client address.

- Service Handling: The backend service(s) receive the forwarded request from the Ingress controller. The service(s) process the request and generate a response.

- Response Handling: The response from the backend service(s) is sent back to the Ingress controller.

- Response Return: The Ingress controller sends the response back to the original requester, completing the round trip.
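To make the routing decision more concrete, here is a sketch of how host and path rules combine. The host shop.example.com and the services auth-svc, api-svc, and web-svc are hypothetical; the matching behavior described in the comments follows the Exact and Prefix path types of the Kubernetes Ingress API, where the longest matching path wins and Exact takes precedence over Prefix on a tie.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: routing-demo                # hypothetical name
spec:
  rules:
  - host: shop.example.com          # hypothetical host
    http:
      paths:
      # Exact: matches only /login (not /login/ or /login/reset)
      - path: /login
        pathType: Exact
        backend:
          service:
            name: auth-svc          # hypothetical service
            port:
              number: 80
      # Prefix: matches /api, /api/ and /api/v1/users, but not /apiv2
      - path: /api
        pathType: Prefix
        backend:
          service:
            name: api-svc           # hypothetical service
            port:
              number: 8080
      # Prefix "/": matches every other path on this host
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web-svc           # hypothetical service
            port:
              number: 80
```

With these rules, a request for shop.example.com/api/v1/users goes to api-svc, /login goes to auth-svc, and /login/reset falls through to web-svc via the "/" prefix.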

How to access the backend service through Ingress?

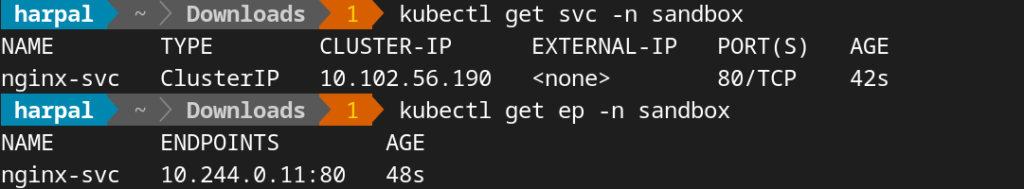

Let’s deploy a simple Nginx web application and expose it as a service. We use type NodePort here, although a ClusterIP service works just as well behind an Ingress. Then we will create an Ingress resource to route traffic to this service and access it from outside the cluster.

```shell
kubectl create namespace sandbox
kubectl create deployment nginx --image=nginx --port 80 -n sandbox
kubectl expose deployments/nginx --name=nginx-np --type=NodePort --port=80 -n sandbox
```

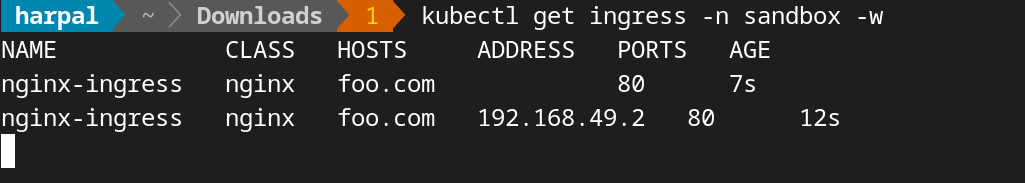

Let’s create Ingress rules to access this from outside the cluster:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  namespace: sandbox
spec:
  rules:
  - host: foo.com
    http:
      paths:
      - backend:
          service:
            name: nginx-np
            port:
              number: 80
        path: /
        pathType: Prefix
```

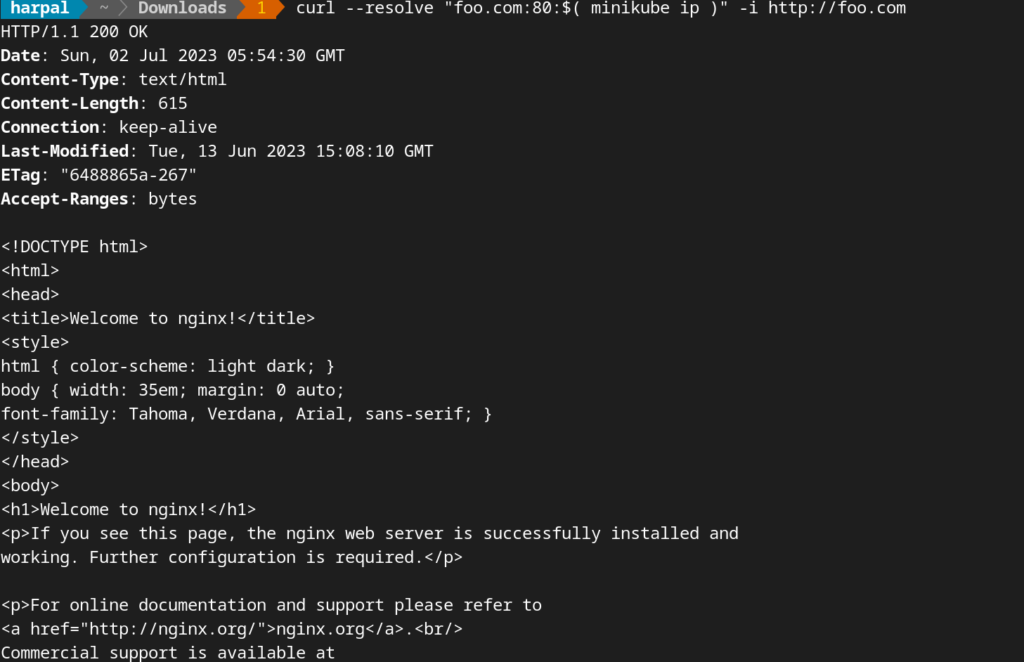

Verify that the Ingress controller is directing traffic:

```shell
curl --resolve "foo.com:80:$(minikube ip)" -i http://foo.com
```

Traffic Management: How can you expose multiple services through the same Ingress?

An Ingress can map multiple hosts and paths to multiple services.

```shell
kubectl create deployment apache --image=httpd --port 80 -n sandbox
kubectl expose deployments/apache --name=apache-np --type=NodePort --port=80 -n sandbox
```

Ingress manifest with multiple backend services:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: multipath
  namespace: sandbox
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: foo.com
    http:
      paths:
      - backend:
          service:
            name: nginx-np
            port:
              number: 80
        path: /nginx
        pathType: Prefix
      - backend:
          service:
            name: apache-np
            port:
              number: 80
        path: /apache
        pathType: Prefix
```

In this case, requests are sent to one of two services depending on the path in the requested URL. Clients can therefore reach both services through a single IP address (that of the Ingress controller). The Ingress is created in the sandbox namespace because an Ingress can only route to services in its own namespace.
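With the Nginx Ingress controller, rewrite-target: / effectively rewrites any request under /nginx or /apache to the backend’s root path, so a sub-path such as /nginx/index.html loses its remainder. If sub-paths need to be preserved, ingress-nginx supports regular-expression paths with capture groups. The sketch below assumes ingress-nginx (the annotations are specific to that controller), and it is meant to replace the /nginx rule of the multipath Ingress rather than run alongside it; the Ingress name is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: multipath-rewrite           # hypothetical name
  namespace: sandbox
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
    # $2 refers to the second capture group in the path below: everything after "/nginx/"
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  rules:
  - host: foo.com
    http:
      paths:
      # /nginx -> /, /nginx/ -> /, /nginx/index.html -> /index.html
      - path: /nginx(/|$)(.*)
        pathType: ImplementationSpecific
        backend:
          service:
            name: nginx-np
            port:
              number: 80
```

ImplementationSpecific is used because regular-expression paths are a controller extension rather than part of the standard Prefix or Exact matching.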

Optionally, look up the external IP address reported by Minikube:

```shell
minikube ip
```

Add a line similar to the following one to the bottom of the /etc/hosts file on your computer (you will need administrator access):

192.168.49.2 foo.com

Now we can access the URLs in a browser:

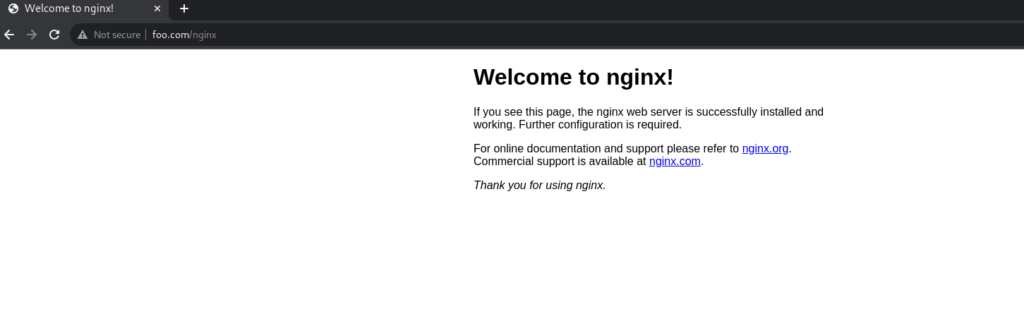

Nginx service: http://foo.com/nginx

Nginx Web App

Apache service: http://foo.com/apache

Apache Web App

Securing Ingress Traffic: How to enable HTTPS using TLS Certificates?

The Ingress controller can terminate SSL/TLS traffic, so the communication between the client and the Ingress controller is encrypted, while the requests forwarded to the backend service pods are sent in plain text. For this to work, you store the SSL certificate and private key in a Secret resource and reference that Secret in the Ingress resource manifest.

Let’s generate a self-signed SSL certificate:

We will use openssl to generate our certificate and private key.

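```shell
# Generate a 2048-bit RSA private key
openssl genpkey -algorithm RSA -out private.key -pkeyopt rsa_keygen_bits:2048
# Create a certificate signing request for the host name used by the Ingress
openssl req -new -key private.key -out csr.pem -subj "/CN=foo.example.com"
# Self-sign the request to produce a certificate valid for 365 days
openssl x509 -req -in csr.pem -signkey private.key -out certificate.pem -days 365
```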

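Next, store the certificate and key in a Secret. The Secret must live in the same namespace as the Ingress that uses it, which here is the sandbox namespace where the nginx-np service runs:

```shell
kubectl create secret tls tls-secret --cert=certificate.pem --key=private.key -n sandbox
```

Now we have to reference the Secret holding the TLS certificate and key in our Ingress manifest file. The manifest uses the networking.k8s.io/v1 API; the older extensions/v1beta1 Ingress API, with its serviceName and servicePort fields, was removed in Kubernetes 1.22.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: foo
  namespace: sandbox
spec:
  tls:
  - hosts:
    - foo.example.com
    secretName: tls-secret
  rules:
  - host: foo.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-np
            port:
              number: 80
```

You can now use HTTPS to access your service through the Ingress: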

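```shell
curl -kv --resolve "foo.example.com:443:$(minikube ip)" https://foo.example.com
```

The -k flag tells curl to skip certificate verification, which is needed here because the certificate is self-signed.

SSL Passthrough: How to provide end-to-end encryption using Ingress?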

If end-to-end encryption is required, SSL/TLS traffic must be terminated in the backend pod instead of at the Ingress controller. Whether SSL passthrough is available, and how it is configured, depends on the Ingress controller. Since Minikube uses the Nginx Ingress controller, we will look at how passthrough is implemented there.

In the case of the Nginx Ingress controller, you add the annotation shown in the following listing.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: foo-ssl-passthrough
  annotations:
    nginx.ingress.kubernetes.io/ssl-passthrough: "true"
spec:
  ...
```

SSL passthrough support in the Nginx Ingress controller isn’t enabled by default. To enable it, the controller must be started with the --enable-ssl-passthrough flag.
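A fuller sketch of a passthrough Ingress might look like the following. It assumes the controller was started with --enable-ssl-passthrough; the host secure.example.com and the service secure-app, whose pods are assumed to terminate TLS themselves on port 443, are hypothetical. Because the controller never decrypts the traffic, it can only route on the host name sent in the TLS SNI extension, so path rules and other annotations on this Ingress have no effect.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: foo-ssl-passthrough
  annotations:
    nginx.ingress.kubernetes.io/ssl-passthrough: "true"
spec:
  rules:
  # Routing uses only the SNI host name; the encrypted HTTP path is never inspected
  - host: secure.example.com        # hypothetical host
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: secure-app        # hypothetical service whose pods serve TLS themselves
            port:
              number: 443
```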

Popular Ingress Controllers

There are many Ingress controllers for Kubernetes; some of the most popular include:

| Controller | Description |
|---|---|
| Nginx | A great choice for its performance and flexibility, and one of the most widely used Ingress controllers in Kubernetes. |
| Traefik | A good choice for its broad feature set, including load balancing, TLS termination, and health checks. |
| HAProxy | A high-performance Ingress controller known for its reliability; a good choice for applications that require high throughput and low latency. |
| Contour | An Ingress controller built on top of Envoy; a good choice for applications that need advanced features such as fault tolerance and traffic splitting. |
| Gloo | An Envoy-based Ingress controller and API gateway; a good choice for applications that require a high degree of customization. |

Best Practices for Ingress Usage

Here are some best practices for Ingress usage in Kubernetes:

- Use a Reliable and Well-Maintained Ingress Controller: Choose a widely used and actively maintained Ingress controller like Nginx Ingress, Traefik, or HAProxy Ingress. These controllers have robust features, good community support, and frequent updates.

- Define Clear and Concise Ingress Rules: Keep your Ingress rules simple and well-organized. Use meaningful hostnames, path-based routing, and annotations to specify the desired behavior for each rule.

- Use Secrets for SSL/TLS Certificates: Store SSL/TLS certificates in Kubernetes Secrets and reference them in the Ingress configuration. Avoid hardcoding sensitive information in the Ingress manifest.

- Enable SSL/TLS Encryption: Whenever possible, use SSL/TLS encryption for Ingress traffic. Terminate SSL/TLS at the Ingress controller and, where the data is sensitive, re-encrypt traffic to the backend services or use SSL passthrough so that it never travels unencrypted inside the cluster.

- Implement Access Controls: Use authentication mechanisms like OAuth, JWT, or basic authentication to restrict access to your Ingress endpoints. This ensures that only authorized users or systems can access your services.

- Monitor and Analyze Ingress Traffic: Set up monitoring and logging for your Ingress controller to track traffic patterns, identify performance issues, and detect anomalies. Use tools like Prometheus and Grafana to gain insights into your Ingress traffic.

- Implement Rate Limiting and DDoS Protection: Protect your Ingress endpoints from excessive traffic or DDoS attacks by implementing rate limiting and setting up additional security measures such as IP allowlists or denylists.

- Regularly Update and Patch Ingress Controllers: Keep your Ingress controller up to date with the latest versions and security patches. Regularly check for updates from the Ingress controller’s official repository and apply them as needed.

- Test Ingress Configurations: Validate your Ingress configurations using tools like kube-score or kubeconform (the maintained successor to kubeval) to catch schema errors or potential issues before applying them in production. Perform thorough testing to ensure proper routing and functionality.

- Backup and Restore Ingress Configurations: Backup your Ingress configurations to ensure you can recover them in case of accidental deletion or configuration issues. Regularly test the restoration process to verify its effectiveness.
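As one example of the access-control and rate-limiting practices above, the sketch below uses annotations from the Nginx Ingress controller; other controllers configure these features differently. The Ingress name and host are hypothetical, and the basic-auth Secret is assumed to exist already with an auth key holding htpasswd-formatted credentials (for example, created with htpasswd -c auth admin followed by kubectl create secret generic basic-auth --from-file=auth -n sandbox).

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: protected-app               # hypothetical name
  namespace: sandbox
  annotations:
    # Require HTTP basic authentication using credentials from the basic-auth Secret (assumed to exist)
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: "Authentication Required"
    # Limit each client IP to about 5 requests per second (ingress-nginx allows a short burst above this)
    nginx.ingress.kubernetes.io/limit-rps: "5"
spec:
  rules:
  - host: protected.example.com     # hypothetical host
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-np
            port:
              number: 80
```

Keeping these policies in annotations means they are versioned together with the routing rules, which also makes the backup practice above more effective.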

Conclusion

Ingress rules offer a flexible and secure way to expose applications and manage traffic within Kubernetes clusters. In this blog post, we have explored the concepts, configuration, and best practices of using Ingress rules. By leveraging Ingress, you can simplify external access to services, optimize traffic management, and enhance security in your Kubernetes deployments. Implementing proper Ingress strategies will enable you to efficiently expose your applications while maintaining the desired level of control and security.

FAQs

Can I use Ingress to route traffic to services outside the cluster?

Ingress is designed to route external traffic to services inside the cluster. However, some Ingress controllers provide extensions or plugins that allow you to route traffic to destinations outside the cluster, such as external load balancers or other external endpoints.

Can I use Ingress for TCP or UDP traffic?

Ingress is primarily designed for HTTP(S) traffic. For TCP or UDP traffic, you may need to consider other solutions like Kubernetes Services of type LoadBalancer or using specialized controllers for TCP or UDP load balancing.
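For example, the Nginx Ingress controller can forward raw TCP traffic through a ConfigMap instead of an Ingress resource: each key is an external port on the controller, and each value has the form namespace/service-name:port. This sketch assumes the controller runs in the ingress-nginx namespace, was started with --tcp-services-configmap=ingress-nginx/tcp-services, and has the chosen port exposed on its own service; the redis service in the sandbox namespace is hypothetical.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: tcp-services
  namespace: ingress-nginx
data:
  # External port 6379 on the controller -> port 6379 of the (hypothetical) redis service in sandbox
  "6379": "sandbox/redis:6379"
```

UDP works the same way through a separate ConfigMap referenced by the --udp-services-configmap flag.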

How does Ingress work in Kubernetes?

Ingress works by acting as a layer 7 load balancer and routing external traffic to the appropriate services within the cluster based on the defined rules in the Ingress resource.

How do I choose the right Ingress controller for my needs?

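The right Ingress controller depends on your specific requirements: the protocols you need to support (HTTP only, or also TCP/UDP), advanced traffic features such as traffic splitting or authentication, integration with your cloud provider’s load balancers, performance, and the community or commercial support available. For basic host- and path-based routing, a widely used controller such as the Nginx Ingress controller is usually sufficient; if you need more advanced features, compare controllers such as Traefik, HAProxy, Contour, or Gloo against those requirements.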

How do I troubleshoot Ingress problems?

Troubleshooting Ingress-related issues involves checking the Ingress configuration, validating DNS records, examining controller logs, and verifying that the backend services are correctly configured and reachable within the cluster.

Is ingress a namespace-scoped resource?

Yes, Ingress is a namespace-scoped resource in Kubernetes. This means that an Ingress resource is created within a specific namespace and can only route traffic to services within the same namespace.
